I’ve been asked recently where and how I host my blog, and while it’s simple to answer now that I’ve just completed an update to add authentication and discussion features, the truth is that in 12 months I’ll probably have forgotten it all again and I’ll be back learning all about Firestore rules and indexes once more.

So this post is as much for future me as it is for anyone else wondering about Firebase as a hosting platform.

Why Firebase?

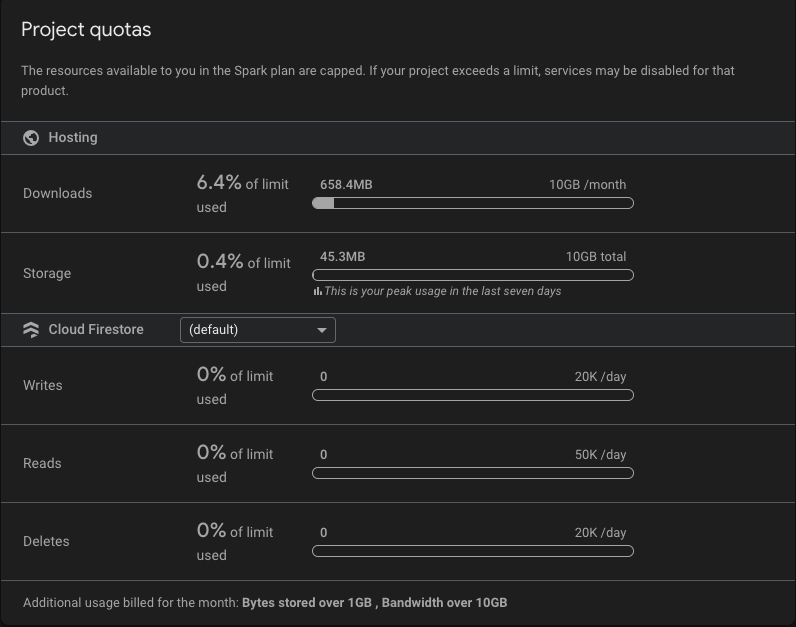

Firebase hosting dashboard

The main reason I chose Firebase is ease of use and having everything in one place with a dead-simple dashboard. With Firebase’s Spark plan (the free tier), you get:

- Authentication - Complete user management system

- Cloud Storage - Object storage for files and images

- Firestore - NoSQL document database

- Hosting - Static site hosting with CDN

- Custom domain with HTTPS certificates that I don’t need to worry about

Until the blog starts using a few hundred MB of egress per day, it is, for all intents and purposes, free to host my code. The authentication is also free for under 50,000 Monthly Active Users (MAU), which is… optimistic for my blog traffic!
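To put “a few hundred MB of egress per day” into perspective, here is a rough sketch of how many page views fit in a daily allowance. It assumes the Spark plan’s Hosting transfer limit of 360 MB/day (worth re-checking on Firebase’s pricing page, since quotas change) and uses made-up page weights, so treat the output as an order of magnitude, not a forecast. `dailyPageViewBudget` is a hypothetical helper.

```typescript
// Back-of-the-envelope daily page-view budget for a fixed egress allowance.
// Assumptions: 360 MB/day of Hosting transfer on the Spark plan, and decimal
// units (1 MB = 1,000 KB). Check current quotas before relying on the number.
const SPARK_HOSTING_MB_PER_DAY = 360;

function dailyPageViewBudget(
  pageWeightKB: number,
  allowanceMB: number = SPARK_HOSTING_MB_PER_DAY,
): number {
  // Reject zero, negative and NaN page weights.
  if (!(pageWeightKB > 0)) {
    throw new RangeError("pageWeightKB must be a positive number");
  }
  // Whole page views that fit inside the daily allowance.
  return Math.floor((allowanceMB * 1000) / pageWeightKB);
}

// Illustrative page weights, not measurements of this blog:
console.log(dailyPageViewBudget(150)); // 2400 (first visit, nothing cached)
console.log(dailyPageViewBudget(30)); // 12000 (repeat visit, CSS/JS already cached)
```

Browser caching matters as much as page size here: every asset a returning visitor already has is transfer that never counts against the allowance.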

Note: If you plan to use Firebase Storage for protected content (as described below), you’ll need to upgrade to the Blaze plan (pay-as-you-go). While this removes the “completely free” aspect, the generous free tier limits mean you’re unlikely to incur charges unless you have significant traffic or storage usage.

The Static + Frontend Approach

The key to keeping costs down is using static pages with frontend JavaScript. My Hugo site generates static HTML, CSS, and JavaScript, then the frontend handles dynamic features like comments and user authentication directly with Firebase.

All discussion functionality runs in the browser and talks directly to Firestore, with security rules (rather than Cloud Functions) deciding what each user can read and write. That means:

- No server-side code to maintain

- No cloud function execution costs

- Simpler architecture

- Direct client-to-database communication

Security: The Critical Piece

Here’s where things get serious. Firestore security rules are absolutely critical, because misunderstanding them can expose sensitive and personal data to the wrong people, especially if the database is left in the console’s “test mode” (which lets anyone read and write until an expiry date) beyond early development.

My current rules handle comment creation, updates, and a reporting system:

```
rules_version = '2';

service cloud.firestore {
  match /databases/{database}/documents {

    match /comments/{commentId} {
      // Anyone can read comments
      allow read: if true;

      // Authenticated users can create comments as themselves
      allow create: if request.auth != null
        && request.auth.uid == request.resource.data.authorId
        && request.resource.data.content is string
        && request.resource.data.content.size() <= 1000
        && request.resource.data.content.size() > 0
        && request.resource.data.postPath is string
        && request.resource.data.deleted == false
        // Pin the edit window to server time (the client writes serverTimestamp())
        && request.resource.data.timestamp == request.time
        // Start the report counter at zero so it can be incremented later
        && request.resource.data.reports == 0;

      // Users can update their own comments (within 1 hour) or increment reports
      allow update: if request.auth != null
        && (
          // Author can edit their own comment within 1 hour; only the content and
          // the soft-delete flag may change (not authorId, timestamp or reports)
          (request.auth.uid == resource.data.authorId
            && request.resource.data.diff(resource.data).affectedKeys().hasOnly(['content', 'deleted'])
            && request.resource.data.content is string
            && request.resource.data.content.size() <= 1000
            && request.resource.data.content.size() > 0
            && request.resource.data.deleted is bool
            && request.time < resource.data.timestamp + duration.value(1, 'h'))

          // Any authenticated user can increment reports by exactly one,
          // and nothing else about the comment may change
          || (request.resource.data.diff(resource.data).affectedKeys().hasOnly(['reports'])
            && request.resource.data.reports == resource.data.reports + 1)
        );
    }

    match /reports/{reportId} {
      // Authenticated users can create reports
      // Report ID must be in format: commentId_userId to prevent duplicates
      allow create: if request.auth != null
        && request.auth.uid == request.resource.data.reporterId
        && request.resource.data.commentId is string
        && reportId == request.resource.data.commentId + '_' + request.auth.uid;

      // Allow users to check if they've already reported
      allow get: if request.auth != null
        && reportId == resource.data.commentId + '_' + request.auth.uid;

      // Reports cannot be listed, updated or deleted
      allow list, update, delete: if false;
    }
  }
}
```
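The security rules are the real enforcement, but the comment form can catch the same mistakes before a write is rejected. The sketch below is a hypothetical client-side pre-check (`NewCommentInput` and `checkNewComment` are illustrative names, not part of Firebase) that mirrors the create rule’s checks on authorId, content, postPath and deleted. Anyone can bypass client code, so this only improves the user experience; it does not replace the rules.

```typescript
// Hypothetical client-side pre-check mirroring the Firestore create rule
// for /comments. Field names match the rules; the type name is illustrative.
interface NewCommentInput {
  authorId: string;
  content: string;
  postPath: string;
  deleted: boolean;
}

const MAX_COMMENT_LENGTH = 1000;

// Returns human-readable problems; an empty array means the mirrored checks pass.
function checkNewComment(signedInUid: string | null, input: NewCommentInput): string[] {
  const problems: string[] = [];

  // request.auth != null && request.auth.uid == request.resource.data.authorId
  if (signedInUid === null) {
    problems.push("you must be signed in to comment");
  } else if (input.authorId !== signedInUid) {
    problems.push("authorId must be the signed-in user's uid");
  }

  // content.size() > 0 && content.size() <= 1000
  // JS `length` counts UTF-16 code units, which may not match how the rules
  // engine counts characters such as emoji, so treat the boundary as approximate.
  if (input.content.length === 0) {
    problems.push("comment is empty");
  } else if (input.content.length > MAX_COMMENT_LENGTH) {
    problems.push(`comment is longer than ${MAX_COMMENT_LENGTH} characters`);
  }

  // postPath is string (a runtime check for callers that bypass the type system)
  if (typeof input.postPath !== "string") {
    problems.push("postPath must be a string");
  }

  // deleted == false
  if (input.deleted !== false) {
    problems.push("new comments must have deleted set to false");
  }

  return problems;
}

const problems = checkNewComment("uid-123", {
  authorId: "uid-123",
  content: "Great write-up!",
  postPath: "/posts/firebase-hosting/",
  deleted: false,
});
console.log(problems.length === 0 ? "ok to submit" : problems.join("; ")); // ok to submit
```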

What These Rules Prevent

These rules include several anti-spam and security measures:

- Content length limits (1 to 1,000 characters) prevent both empty and excessively long comments

- Author verification ensures users can only create/edit comments as themselves

- Time-based editing limits comment edits to 1 hour after creation

- Duplicate report prevention through structured report IDs

- Data integrity by checking field types and values (for example, postPath must be a string and new comments must start with deleted set to false)
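Two of those protections are easier to reason about with concrete values. The sketch below models the one-hour edit window and the deterministic report ID, using plain `Date` values in place of Firestore timestamps; `isWithinEditWindow` and `reportDocId` are hypothetical helper names. Because the report ID is derived from the comment and the user, a second report by the same user (written with `setDoc`, say) targets a document that already exists, so Firestore evaluates it as an update, which the rules deny.

```typescript
// Models two rule checks with plain Dates instead of Firestore timestamps.
const EDIT_WINDOW_MS = 60 * 60 * 1000; // duration.value(1, 'h')

// Mirrors: request.time < resource.data.timestamp + duration.value(1, 'h')
function isWithinEditWindow(createdAt: Date, now: Date): boolean {
  return now.getTime() < createdAt.getTime() + EDIT_WINDOW_MS;
}

// Mirrors: reportId == commentId + '_' + request.auth.uid
function reportDocId(commentId: string, reporterUid: string): string {
  return `${commentId}_${reporterUid}`;
}

const exampleCreatedAt = new Date("2025-01-01T12:00:00Z");
console.log(isWithinEditWindow(exampleCreatedAt, new Date("2025-01-01T12:59:59Z"))); // true
console.log(isWithinEditWindow(exampleCreatedAt, new Date("2025-01-01T13:00:00Z"))); // false

// Reporting the same comment twice yields the same ID, so the second write
// lands on an existing document instead of creating a new one.
console.log(reportDocId("abc123", "user42")); // abc123_user42
```

The comparison is strict, so an edit at exactly the one-hour mark is rejected.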

The Dashboard Experience

Firebase’s console is genuinely pleasant to use. You can monitor usage, check authentication logs, browse your Firestore data, and manage hosting deployments all from one interface. Coming from AWS, I find the simplicity refreshing.

Protected Content with Firebase Storage

One powerful feature I’ve implemented is using Firebase Storage with authentication to protect sensitive content. Instead of relying on client-side JavaScript to hide content (which can be bypassed), the content is stored in Firebase Storage and only accessible to authenticated users.

Storage Security Rules

Firebase Storage uses a rules language very similar to Firestore’s for access control:

```
rules_version = '2';

service firebase.storage {
  match /b/{bucket}/o {
    // Public files accessible to all
    match /public/{allPaths=**} {
      allow read;
    }

    // Protected content requires authentication
    match /protected/{allPaths=**} {
      allow read: if request.auth != null;
    }

    // Granular access control (if needed)
    match /private/{userId}/{allPaths=**} {
      allow read: if request.auth != null && request.auth.uid == userId;
    }
  }
}
```
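The three match blocks boil down to a path-based decision for reads. The function below is an illustrative model of that decision, not how the rules engine works internally, and `canReadObject` is a hypothetical name. Writes aren’t modelled because none of the blocks allows them: browsers can’t upload anything, while the deployment workflow uploads with a service account, which isn’t subject to Firebase security rules.

```typescript
// Illustrative model of the Storage read rules above (not the rules engine).
// objectPath is the object name inside the bucket, e.g. "protected/notes.html".
function canReadObject(objectPath: string, uid: string | null): boolean {
  const [area, ...rest] = objectPath.split("/");

  switch (area) {
    case "public":
      // match /public/{allPaths=**} { allow read; }
      return true;
    case "protected":
      // allow read: if request.auth != null;
      return uid !== null;
    case "private":
      // match /private/{userId}/{allPaths=**}: the segment after "private" is the owner.
      return uid !== null && rest.length > 0 && rest[0] === uid;
    default:
      // No matching allow statement, so the read is denied.
      return false;
  }
}

console.log(canReadObject("public/logo.png", null)); // true
console.log(canReadObject("protected/notes.html", null)); // false
console.log(canReadObject("protected/notes.html", "uid-1")); // true
console.log(canReadObject("private/uid-1/draft.html", "uid-2")); // false
```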

Content Hydration Pattern

The approach uses a clever hydration pattern:

- Static hosting serves a placeholder page for protected routes (e.g., /protected/document)

- Client-side JavaScript detects the route and checks authentication

- If authenticated, fetches the actual content from Firebase Storage

- Replaces the placeholder with the protected content

This ensures that unauthenticated users - including bots and curl requests - never see the actual content, as it’s not part of the static site deployment.
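Here is a minimal sketch of that flow. It assumes the naming convention used by the deployment workflow later in this post (a page built at /protected/<name>/ is uploaded as protected/<name>.html), and the names `protectedObjectPath`, `hydrateProtectedPage` and `loadHtml` are hypothetical. The loader is passed in so the logic can be exercised without a browser; in a real page it might wrap `getBlob(ref(storage, objectPath))` from the modular `firebase/storage` SDK, and the caller would swap the returned HTML into the placeholder.

```typescript
// Sketch of the client-side hydration decision for protected routes.
// Assumed convention (from the deploy workflow): /protected/<name>/ -> protected/<name>.html

type HydrationResult =
  | { kind: "not-protected" }
  | { kind: "sign-in-required" }
  | { kind: "hydrated"; html: string };

// Map a URL path such as "/protected/document/" to its Storage object path.
function protectedObjectPath(pathname: string): string | null {
  const match = /^\/protected\/([^\/]+)\/?$/.exec(pathname);
  return match ? `protected/${match[1]}.html` : null;
}

// loadHtml is injected (hypothetical signature); in a browser it might call
// getBlob(ref(storage, objectPath)) from firebase/storage and read the blob as text.
async function hydrateProtectedPage(
  pathname: string,
  isSignedIn: boolean,
  loadHtml: (objectPath: string) => Promise<string>,
): Promise<HydrationResult> {
  const objectPath = protectedObjectPath(pathname);
  if (objectPath === null) {
    return { kind: "not-protected" }; // ordinary static page, nothing to do
  }
  if (!isSignedIn) {
    // Keep the placeholder; the Storage rules would refuse the read anyway.
    return { kind: "sign-in-required" };
  }
  return { kind: "hydrated", html: await loadHtml(objectPath) };
}

// Usage with an in-memory stand-in for Firebase Storage:
const fakeBucket: Record<string, string> = {
  "protected/document.html": "<article>Members-only notes</article>",
};
hydrateProtectedPage("/protected/document/", true, async (path) => fakeBucket[path] ?? "")
  .then((result) => console.log(result.kind)); // hydrated
```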

Deployment Process

My deployment is automated via GitHub Actions with protected content handling:

```yaml
name: Build & Deploy to Firebase Hosting

on:
  push:
    branches:
      - main

jobs:
  build_and_deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4
        with:
          submodules: true

      - name: Setup Hugo
        uses: peaceiris/actions-hugo@v2
        with:
          hugo-version: "0.149.1"
          extended: true

      - name: Build
        run: hugo --minify

      - name: Prepare Protected Content for Upload
        run: |
          # Create upload directory for protected content
          mkdir -p upload/protected

          # Move protected files to upload directory
          if [ -d "public/protected" ]; then
            cd public/protected
            for dir in */; do
              if [ -d "$dir" ] && [ "$dir" != "/" ]; then
                dirname=$(basename "$dir")
                if [ -f "${dir}index.html" ]; then
                  echo "Moving ${dirname}.html to upload directory..."
                  # Copy the rendered page to the upload directory as <name>.html
                  cp "${dir}index.html" "../../upload/protected/${dirname}.html"
                  # Create metadata file for TTL configuration
                  echo "30" > "../../upload/protected/${dirname}.ttl" # 30 days
                  # Remove the directory from public so it is never deployed to Hosting
                  rm -rf "$dir"
                  echo "✓ Moved ${dirname}.html and removed from public"
                fi
              fi
            done
            cd ../..
          fi

      - name: Deploy Hosting
        uses: FirebaseExtended/action-hosting-deploy@v0
        with:
          repoToken: ${{ secrets.GITHUB_TOKEN }}
          firebaseServiceAccount: ${{ secrets.FIREBASE_SERVICE_ACCOUNT }}
          channelId: live
          projectId: my-project

      - name: Upload Protected Content to Firebase Storage
        env:
          GOOGLE_APPLICATION_CREDENTIALS_JSON: ${{ secrets.FIREBASE_SERVICE_ACCOUNT }}
        run: |
          # Configuration
          PROJECT_ID="my-project"
          STORAGE_BUCKET="gs://${PROJECT_ID}.firebasestorage.app"

          # Authenticate with gcloud using service account directly
          echo "$GOOGLE_APPLICATION_CREDENTIALS_JSON" | gcloud auth activate-service-account --key-file=-
          gcloud config set project "$PROJECT_ID"

          # Set CORS configuration for Firebase Storage
          gsutil cors set storage.cors.json "$STORAGE_BUCKET"

          # Upload files from upload directory to Firebase Storage
          if [ -d "upload/protected" ]; then
            echo "Uploading protected content to Firebase Storage..."
            cd upload/protected
            # Upload each file with appropriate cache control
            for file in *.html; do
              if [ -f "$file" ]; then
                basename=$(basename "$file" .html)
                echo "Uploading $file to Firebase Storage..."
                # Upload file
                gsutil cp "$file" "${STORAGE_BUCKET}/protected/$file"
                # Set cache control based on TTL file; "private" stops shared
                # caches from storing content meant only for signed-in users
                if [ -f "${basename}.ttl" ]; then
                  ttl_days=$(cat "${basename}.ttl")
                  ttl_seconds=$((ttl_days * 24 * 60 * 60))
                  gsutil setmeta -h "Cache-Control:private, max-age=${ttl_seconds}" "${STORAGE_BUCKET}/protected/$file"
                fi
                echo "✓ Successfully uploaded $file"
              fi
            done
            cd ../..
          fi
```

The workflow handles the entire build-deploy cycle, including moving protected content to Firebase Storage while keeping the main site static and fast.

Limitations and Considerations

While Firebase hosting is excellent for static sites with client-side dynamic features, a static-only setup like this one isn’t suitable for:

- Server-side rendering (SSR)

- Complex backend logic (without cloud functions)

- High-bandwidth applications

- Applications requiring fine-grained server control

Conclusion

Firebase hosting strikes the perfect balance for my blog: powerful enough to handle authentication and real-time discussions, simple enough that I might actually remember how it works in a year’s time, and free enough that I don’t worry about the bill.

The real magic is in the security rules though. Get them right, and you have a robust, scalable system. Get them wrong, and you might as well have left your database wide open to the internet.

Future me: you’re welcome for the documentation.